The Unseen Hand: What is Algorithmic Bias in Your VibeWear?

Ever feel like a 'smart' system just doesn't quite 'get' you? Or worse, that it misinterprets your signals entirely? VibeWear promises to mirror your mood. Yet an invisible factor, algorithmic bias, can warp that reflection. Algorithms train on data. Imperfect data yields an imperfect system. Plain truth.

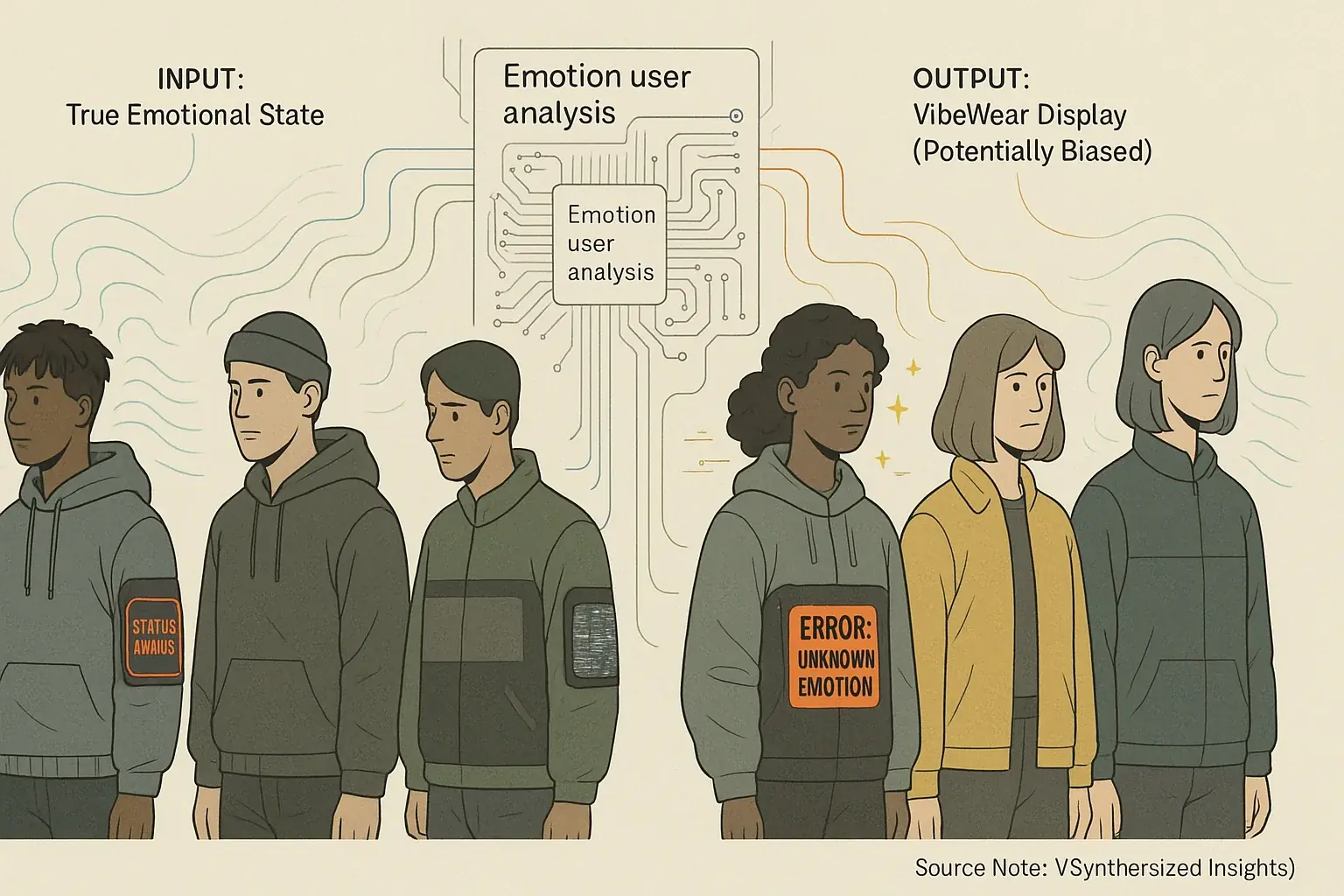

What is the real impact for VibeWear users? Our analysis points to patterns in which garments might consistently flag 'anxious' during deep concentration. A system could also misread your unique cultural expressions. This goes beyond simple irritation. It erodes authentic self-expression and damages trust in the technology. If VibeWear's emotion-detection systems harbor bias, they fail at their primary function: genuine representation.

This issue is more than a technical error. It poses a complex ethical puzzle for the evolution of adaptive fashion. At stake is fairness in how technology perceives human emotion. Your VibeWear should genuinely echo your internal state, not a distorted algorithmic guess. That is why it is vital to explore where bias originates and how to mitigate it.

The Roots of Misunderstanding: Where Does Bias Creep In?

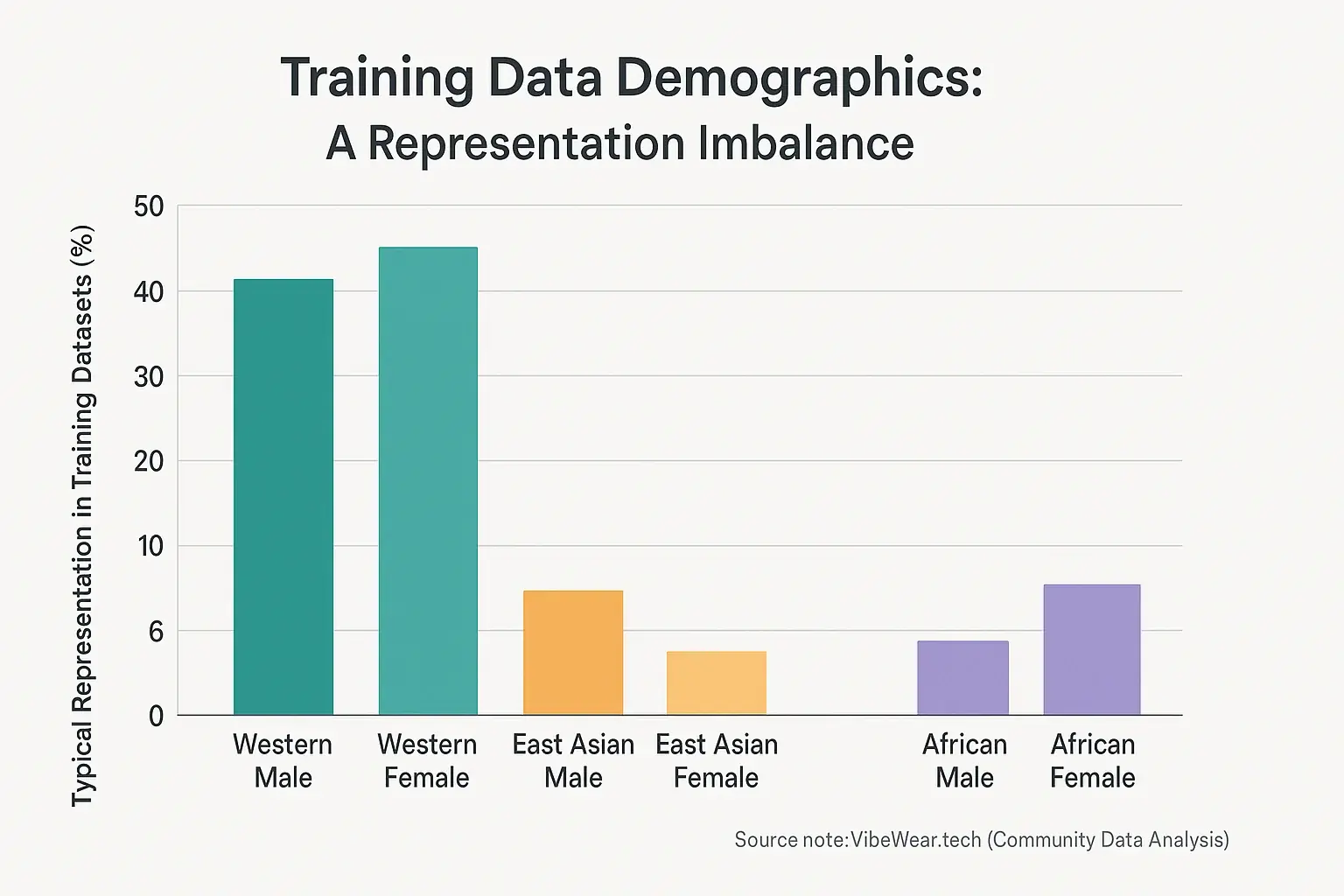

Algorithms simply mirror their training data. VibeWear's emotion recognition systems learn from these crucial inputs. Yet many existing facial emotion datasets suffer from significant imbalances. Multiple studies indicate that these datasets often overrepresent Caucasian individuals, while other ethnic groups, such as people of Asian or African descent, remain underrepresented. This skew directly degrades performance for users from underrepresented groups. The problem? Systemic, not malicious intent. Our VibeWear analysis points to deep issues in data collection practices.
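One way to surface this kind of skew before training is a simple representation audit. The Python below is a minimal sketch, assuming each training sample carries a single demographic label: it reports each group's share of the data and flags groups that fall below a chosen share. The function name `representation_report`, the group labels, the 10% `min_share` threshold, and the toy counts are all hypothetical illustrations, not drawn from any real dataset or from VibeWear's own pipeline.

```python
from collections import Counter


def representation_report(groups, min_share=0.10):
    """Return each group's share of the dataset and the groups below min_share.

    `groups` holds one demographic label per training sample. These labels
    are hypothetical; real datasets may encode demographics differently, or
    not record them at all.
    """
    counts = Counter(groups)
    total = sum(counts.values())
    shares = {group: n / total for group, n in counts.items()}
    # Groups whose share of the data is below the (assumed) minimum threshold.
    underrepresented = sorted(g for g, s in shares.items() if s < min_share)
    return shares, underrepresented


# Toy example with made-up numbers: 80 samples from group A, 15 from B, 5 from C.
samples = ["A"] * 80 + ["B"] * 15 + ["C"] * 5
shares, flagged = representation_report(samples)
print(shares)   # {'A': 0.8, 'B': 0.15, 'C': 0.05}
print(flagged)  # ['C']
```

A share threshold is a blunt instrument. It shows *that* a group is scarce, not whether the model actually performs worse for it, so an audit like this complements rather than replaces per-group evaluation.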

Emotional expression is not universal. That's an unspoken truth we see at VibeWear. Different cultures follow distinct display rules governing how emotions are shown in public. So what happens? A face that reads as 'neutral' in one culture might register as 'bored' to an algorithm trained primarily on another culture's expressions. Your VibeWear could then misread genuine feelings. Cultural context is key. Imagine a user from a culture that values emotional moderation, as some East Asian cultures do. Their VibeWear might constantly signal 'unhappiness' even though they are perfectly content. This mismatch erodes trust. Quickly.

Bias can also be embedded in the algorithm's design, not just its data. How an algorithm is constructed fundamentally matters. We have observed that the features a model prioritizes, or the optimization methods it uses, can inadvertently skew its view. For instance, a system might overweight subtle facial micro-expressions and underweight the broader physiological signals from VibeWear's sensors. The result? It might miss crucial emotional cues from individuals with naturally less animated faces.
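To illustrate how a design choice alone can skew results, here is a hypothetical late-fusion score that blends a facial-expression signal with a physiological one (for example, an arousal estimate from heart-rate or skin-conductance sensors). The function `fused_score`, the 0-to-1 score scale, the weights, and the 0.5 detection threshold are all assumptions made for this sketch. They are not VibeWear's actual model.

```python
def fused_score(face_score, physio_score, face_weight):
    """Weighted average of two emotion-intensity scores, each in [0, 1].

    face_weight is the share given to the facial signal; the physiological
    signal receives the remainder (1 - face_weight). Hypothetical design.
    """
    if not 0.0 <= face_weight <= 1.0:
        raise ValueError("face_weight must be between 0 and 1")
    return face_weight * face_score + (1.0 - face_weight) * physio_score


THRESHOLD = 0.5  # hypothetical cut-off for "emotion detected"

# A wearer with a naturally less animated face: weak facial signal,
# strong physiological signal (made-up values).
face, physio = 0.2, 0.9

face_heavy = fused_score(face, physio, face_weight=0.9)  # about 0.27
balanced = fused_score(face, physio, face_weight=0.5)    # about 0.55

print(face_heavy >= THRESHOLD)  # False: the emotional cue is missed
print(balanced >= THRESHOLD)    # True: the same cue is detected
```

The inputs are identical in both calls. Only the weighting changes, which is the point: a design that leans heavily on facial cues systematically underserves people whose faces move less, even with perfectly balanced data.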

Once deployed, a biased system can create a damaging feedback loop. Incorrect interpretations may feed back into the system's learning, and the algorithm then treats these errors as confirmed truth. This entrenches the original bias further. VibeWear understands that breaking this cycle takes persistent, conscious effort. Design. Refinement. Always.
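The feedback loop can be made concrete with a toy simulation. It is a minimal sketch under loudly stated assumptions, not a model of any real system. A hypothetical system tracks the share of 'unhappy' labels it holds for one user group. Each round, it labels a batch of readings that are truly neutral, leaning toward whichever label it already believes is more common, and then adds its own labels back to the training pool as if they were ground truth. The squared "sharpening" rule and the starting numbers are arbitrary choices made for illustration.

```python
def simulate_feedback_loop(initial_unhappy, initial_total, rounds, batch_size):
    """Return the system's estimated 'unhappy' rate for one group after each round.

    Toy model: every reading in each new batch is truly neutral, so any
    'unhappy' label is an error. The labelling rule below is a hypothetical
    sharpening step that exaggerates the system's current belief.
    """
    unhappy, total = float(initial_unhappy), float(initial_total)
    history = [unhappy / total]
    for _ in range(rounds):
        p = unhappy / total
        # Sharpened labelling: if p > 0.5, the share of 'unhappy' labels exceeds p.
        share = p * p / (p * p + (1 - p) * (1 - p))
        unhappy += share * batch_size  # the system's errors are recycled as "truth"
        total += batch_size
        history.append(unhappy / total)
    return history


# Start from a modest bias: 60 of 100 neutral examples mislabelled 'unhappy'.
rates = simulate_feedback_loop(60, 100, rounds=5, batch_size=100)
print([round(r, 3) for r in rates])
```

Under these assumptions the estimated rate climbs every round, even though no new reading is actually unhappy. The starting bias is never corrected, only amplified. Feeding in independently verified labels or user corrections, rather than the model's own outputs, is what breaks the cycle.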

Fighting the Skew: Strategies for Fairer VibeWear Algorithms

The good news? Algorithmic bias is not an unsolvable problem. Developers and designers have powerful tools for building fairer, more equitable VibeWear systems. Actively sourcing truly diverse training data is one crucial step. This data must cover a wide range of demographics, varied cultural expressions, and many emotional nuances, which means going beyond readily available datasets. VibeWear's commitment extends to these rigorous data practices.

Another vital approach is to build transparency directly into the system. You should understand how your VibeWear interprets mood, so VibeWear provides clear explanations. You must also have options to calibrate or correct its interpretations. Imagine a simple in-app feature that lets you teach your VibeWear your unique expressions. You could also opt out of certain data processing paths. This returns power to you. Many early adopters of smart tech feel judged by black boxes. VibeWear's goal is to be a transparent partner that aids your self-expression.
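A calibration feature like this could work roughly as follows. The Python below is a minimal sketch, assuming readings are single scores between 0 and 1. The class `PersonalCalibration`, its methods, the default baseline of 0.5, the 0.15 margin, and the label names are all hypothetical and are not a real VibeWear API. The wearer records a few readings while in a known-neutral state. Later readings are then interpreted relative to that personal baseline instead of a population-wide default, and the wearer can also override a label outright.

```python
from statistics import mean


class PersonalCalibration:
    """Hypothetical per-user calibration layer (illustrative, not a real API)."""

    def __init__(self, default_baseline=0.5, margin=0.15):
        self.baseline = default_baseline  # population-wide fallback baseline
        self.margin = margin              # distance from baseline that counts as a change
        self.corrections = {}             # wearer overrides: system label -> preferred label

    def calibrate(self, neutral_readings):
        """Set the baseline to the mean of readings the wearer marked as neutral."""
        if not neutral_readings:
            raise ValueError("need at least one neutral reading")
        self.baseline = mean(neutral_readings)

    def correct(self, system_label, user_label):
        """Remember that the wearer prefers `user_label` whenever `system_label` fires."""
        self.corrections[system_label] = user_label

    def interpret(self, reading):
        """Map a score in [0, 1] to a coarse label relative to the baseline."""
        if reading < self.baseline - self.margin:
            label = "subdued"
        elif reading > self.baseline + self.margin:
            label = "elevated"
        else:
            label = "neutral"
        return self.corrections.get(label, label)


cal = PersonalCalibration()
print(cal.interpret(0.25))         # subdued: judged against the population default
cal.calibrate([0.2, 0.25, 0.3])    # the wearer's own resting readings
print(cal.interpret(0.25))         # neutral: judged against the personal baseline
cal.correct("elevated", "focused")
print(cal.interpret(0.9))          # focused: the wearer's own label wins
```

Keeping the correction table separate from the baseline makes each kind of user input easy to inspect and reset, which supports the transparency goal above.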

Ongoing vigilance is essential beyond the initial design. This means continuous bias testing for VibeWear technology. Developers use fairness metrics, which compare performance across different user groups to help ensure equitable outcomes. A commitment to regular updates is also necessary to maintain this fairness. It is an ongoing process, not a one-time fix. Ethical guidelines steer this development journey and form the core of VibeWear's approach to responsible innovation.
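As a minimal sketch of one such fairness metric, the Python below computes accuracy separately for each user group and reports the largest gap between groups. The function names, group labels, and evaluation records are hypothetical and made up for illustration. The misreadings in group B echo the 'focused read as anxious' and 'content read as unhappy' cases described earlier.

```python
from collections import defaultdict


def per_group_accuracy(records):
    """records: iterable of (group, true_label, predicted_label) tuples."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, truth, pred in records:
        total[group] += 1
        correct[group] += int(truth == pred)
    return {g: correct[g] / total[g] for g in total}


def accuracy_gap(records):
    """Largest accuracy difference between any two groups (0.0 means parity)."""
    acc = per_group_accuracy(records)
    return max(acc.values()) - min(acc.values())


# Made-up evaluation records: (group, true label, predicted label).
records = [
    ("A", "calm", "calm"), ("A", "focused", "focused"),
    ("A", "happy", "happy"), ("A", "calm", "calm"),
    ("B", "calm", "calm"), ("B", "focused", "anxious"),
    ("B", "happy", "happy"), ("B", "calm", "unhappy"),
]
print(per_group_accuracy(records))  # {'A': 1.0, 'B': 0.5}
print(accuracy_gap(records))        # 0.5
```

In a continuous-testing setup, a check like this could run on every model update and block a release when the gap exceeds a chosen tolerance. Accuracy parity is only one fairness criterion. Others, such as demographic parity or equalized odds, compare different quantities, and which one fits depends on the application.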

You, as users, also play an important part. Demand transparency from adaptive fashion brands. Share feedback on your experiences. Support brands committed to fair practices and to ethical development shaped by community feedback. Your collective voice can shape adaptive fashion and forge a fairer future for everyone.